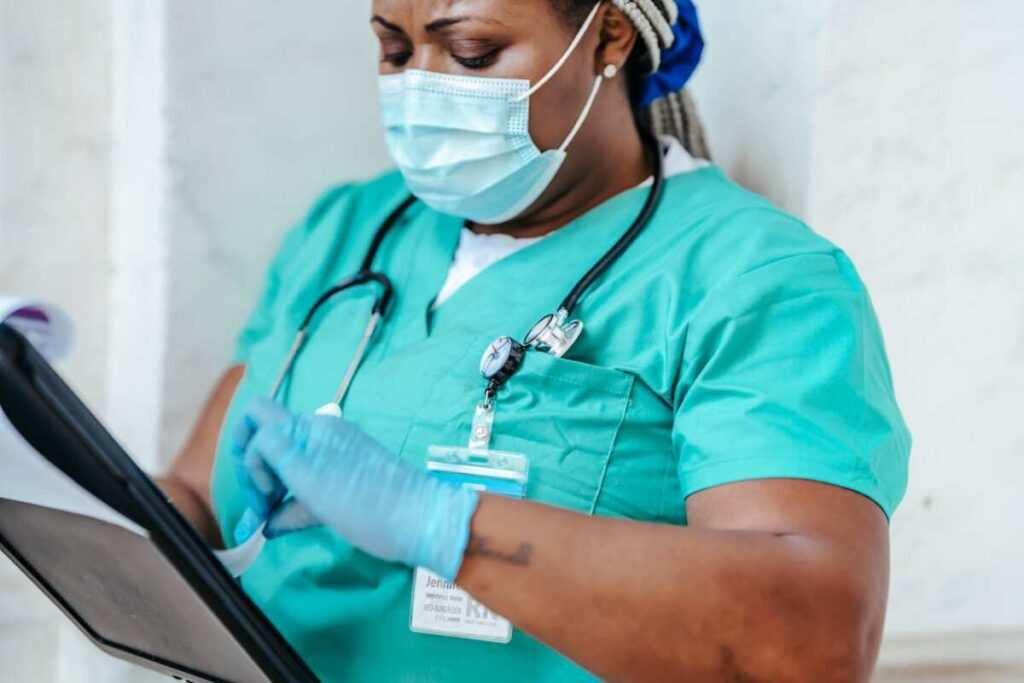

Modern medicine relies on software for almost every step of patient care. Doctors and nurses trust technology to keep accurate records and monitor vitals. A single glitch can disrupt an entire hospital wing in seconds.

Understanding why these crashes happen is the first step toward better safety. Technical literacy is now just as essential as clinical skill. Healthcare workers must be ready for the moment the power goes out.

The Growing Dependence On Digital Tools

The push for more digital tools shows no sign of slowing. Ninety percent of top executives used more digital tools in 2025. These leaders anticipate that the changes will have a major impact on how clinics function. Most managers see automation as a way to handle more patients at once.

Hospital staff use digital dashboards to track medication doses and heart rates. Many doctors now use tablets to write notes during a checkup. Devices send information directly to a central server for storage.

Legal Protections For Impacted Patients

Patients have the right to receive care that meets a professional standard. Experienced legal professionals like Moore Law medical malpractice attorneys assist families when technical errors result in serious injury or loss. Legal guidance can help determine whether a software bug or a human error caused the harm.

Courts look at whether the hospital maintained the equipment properly. Victims deserve answers when a computer screen goes dark at the wrong moment. Technology should help people heal, not create new dangers. Filing a claim can help highlight systemic issues that need fixing.

The Risk Of Cascading System Failures

Hospital networks are often connected in complex ways. A report from a safety group explained that digital outages rarely stay in just one area. Problems spread and trigger failures across many departments at the same time. The result is a chain reaction that stops nurses from doing their daily work.

When the pharmacy system goes down, the nursing station might lose access to patient files, too. It is difficult to isolate a bug when everything is linked together. Systems need better walls between their parts to prevent total blackouts.
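One common way to build such a wall in software is a circuit breaker: after a few failed calls to a struggling system, the caller stops trying for a while and uses a fallback instead, so one outage does not drag down its neighbors. The sketch below is only an illustration. The class, the `pharmacy_lookup` and `cached_orders` functions, and the thresholds are hypothetical and are not taken from any real hospital system.

```python
import time


class CircuitBreaker:
    """Stops calling a failing dependency so its outage does not spread."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # failures allowed before opening
        self.reset_after = reset_after    # cool-down in seconds
        self.clock = clock                # injectable so it can be tested
        self.failures = 0
        self.opened_at = None             # None means calls are allowed

    def call(self, func, fallback):
        # While open, skip the dependency entirely until the cool-down passes.
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()
            # Cool-down over: allow one trial call; one more failure reopens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result


# Hypothetical example: the nursing station keeps working from a cached,
# read-only view while the pharmacy system is unavailable.
def pharmacy_lookup():
    raise ConnectionError("pharmacy system unavailable")  # simulated outage


def cached_orders():
    return "last known medication orders (read-only)"


breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
for _ in range(3):
    print(breaker.call(pharmacy_lookup, cached_orders))
```

In this sketch the third request never reaches the pharmacy system at all. The breaker is already open, so the nursing station gets the cached answer immediately instead of waiting on a system that is down.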

How Technical Glitches Affect Patients

When systems go down, the quality of medical care drops immediately. Data from a privacy news outlet showed that 95% of people surveyed felt patient care suffered from system issues. Problems happen regularly and block staff from seeing the records they need. Delays in looking up data can cause serious mistakes in the emergency room.

Without those records, staff members may have to guess about past allergies or surgeries. A lack of information puts lives at risk during a crisis. Clinicians need real-time access to help their patients the right way.

Why Software Errors Occur In Medicine

Coding errors are not always the fault of the person writing the code. Government guidelines recently mentioned that software errors may start with incorrect or inconsistent requirements. If the goals for the tool are not clear, the final product will have bugs.

Designers must understand exactly what a doctor needs before they start building. When communication between developers and medical experts is poor, a program might work in a lab but fail in a busy hospital. Better planning at the start will result in fewer crashes later.

Strategies For Improving System Reliability

Keeping a hospital running requires more than just buying the latest gadgets. Teams must work together to find weak spots in their current setup.

- Regular testing helps catch bugs before they cause a real crisis.

- Training staff on backup plans ensures they know what to do during a blackout.

- Updating hardware prevents older machines from slowing down new programs.

- Monitoring network traffic can alert IT teams to a crash before it happens.
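The monitoring idea in the last item can be sketched in a few lines. The example below keeps a rolling window of response times and raises a flag when the average climbs above a limit, which is one simple way a slowdown might be noticed before it becomes an outage. The `LatencyMonitor` class, the window size, and the 400 ms limit are assumptions chosen for illustration, not recommended values.

```python
from collections import deque
from statistics import mean


class LatencyMonitor:
    """Flags a slowdown when the rolling average response time is too high."""

    def __init__(self, window=5, threshold_ms=500.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off
        self.threshold_ms = threshold_ms

    def record(self, latency_ms):
        """Add a sample; return True if the rolling average is over the limit."""
        self.samples.append(latency_ms)
        # Wait for a full window so one slow request does not raise an alarm.
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and mean(self.samples) > self.threshold_ms


# Hypothetical response times (in milliseconds) from a records server.
monitor = LatencyMonitor(window=3, threshold_ms=400.0)
for ms in [120, 150, 380, 520, 610]:
    if monitor.record(ms):
        print(f"warning: average response time is high after a {ms} ms sample")
```

Averaging over a window rather than reacting to a single reading is a deliberate choice here. It trades a slightly slower warning for fewer false alarms, which matters when the people receiving the alerts are already busy.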

Staff members should feel comfortable reporting even small glitches to their managers. Fixing a minor lag today prevents a total crash next month. Reliability is a team effort for everyone in the clinic.

Training For Technical Emergencies

Medical teams practice for physical emergencies like heart attacks or fires. They should likewise practice for what happens when the computer screen goes blank. If a nurse cannot see a patient’s chart, there must be a paper backup ready. Having a clear plan reduces the panic that happens during a digital outage.

Emergency drills should include a section on technology failure. Knowing who to call for IT support saves precious minutes. Preparedness is the only shield against uncertainty in the ward.

Technology will continue to be a part of every hospital visit. Although these tools offer many benefits, the risk of failure is always present. Patients deserve to know that the machines keeping them alive are working correctly.

Staying informed about system problems helps everyone push for safer healthcare environments. Constant vigilance is the best way to protect people from digital errors. Safety is the highest goal in every clinic.